OpenAI's pivot away from humanity

How the company has recently started to push a geopolitical narrative

AI policy is a nascent area of public policy, and there is still a lot of room to shape the terms of the debate. Starting with an op-ed in the Washington Post back in July, OpenAI CEO Sam Altman has sought to reframe the AI policy discussion as a struggle between democracies and authoritarian regimes. He argued that the dizzying pace of AI system development means that we need to make a strategic choice about the world we want to live in: “Will it be one in which the United States and allied nations advance a global AI that spreads the technology’s benefits and opens access to it, or an authoritarian one, in which nations or movements that don’t share our values use AI to cement and expand their power?”

OpenAI’s original constituency

To a casual Washington Post reader, this may seem like a sensible question, and I, too, would much rather live in a democratic world than an authoritarian one. The op-ed, however, represents a radical break from OpenAI’s messaging over the past decade. Ever since its founding in 2015, OpenAI’s mission has been to develop artificial general intelligence to “benefit all of humanity”. Altman has spoken on numerous occasions about how AGI will enable humanity to flourish maximally. With this recent shift, however, the company’s new public commitment is to ensuring that a “democratic vision for AI prevails”, driven by a “U.S.-led coalition of like-minded countries”. In contrast with its earlier, more inclusive narrative, AI’s market leader has suddenly disenfranchised the majority of humanity (over 5 billion people currently live outside democracies).

The new chief lobbyist

The architect of OpenAI’s new democratic AI frame is not Sam Altman but Chris Lehane. Around the time of the op-ed, Lehane was announced as the company’s new Vice President of Global Affairs, essentially its chief lobbyist. Lehane has been hugely influential in shaping the American and global political debate, first by coining “a vast right-wing conspiracy” as the term of choice for opponents of the Clinton White House, and later by successfully undermining the regulation of both Airbnb and crypto. A powerful piece in The New Yorker last month documents how Lehane is now setting out to shape the future trajectory of AI policy.

Lehane’s main mission is not to advance OpenAI’s interests directly, but to narrow the terms of the AI policy debate as a whole. Once you accept that OpenAI’s actions are synonymous with the preservation of democracy itself, you will be much more inclined to look the other way when people complain about OpenAI’s alleged theft of copyrighted materials, the environmental costs of training its models, or potentially anticompetitive deals with major Big Tech players. Whilst Altman spoke only last year about how AI development gone bad could mean “lights out for all of us”, the company is now trying to use rising geopolitical tensions between the U.S. and China to drown out the calls for regulation that most leading AI researchers deem urgently necessary.

Anthropic joins in

Dario Amodei, the CEO of OpenAI’s competitor Anthropic, is an unlikely but early adherent of Lehane’s new frame. In an essay published about two months after Altman’s op-ed, Amodei pitches an “entente strategy”, “in which a coalition of democracies seeks to gain a clear advantage (even just a temporary one) on powerful AI by securing its supply chain, scaling quickly, and blocking or delaying adversaries’ access to key resources like chips and semiconductor equipment.” Interestingly, despite Anthropic positioning itself as OpenAI’s more safety-minded breakaway, the essay both fuels an arms race between the U.S. and China and reinforces its rival’s overall political strategy.

Responding to the democratic frame

The implications of the new democratic frame for the rest of us in AI policy aren’t immediately obvious. One lesson might be that it is worth thinking twice before accepting a future 100,000 USD OpenAI democracy grant, however valuable the work being funded may be. Another might be to speak up about the inherent contradiction at the heart of Lehane’s frame: if, on the one hand, OpenAI is like any other company creating risks for society, it should probably be regulated. If, on the other hand, it is truly essential to the preservation of democracy itself, shouldn’t control over the company be handed to democratically elected representatives rather than a nine-person board?

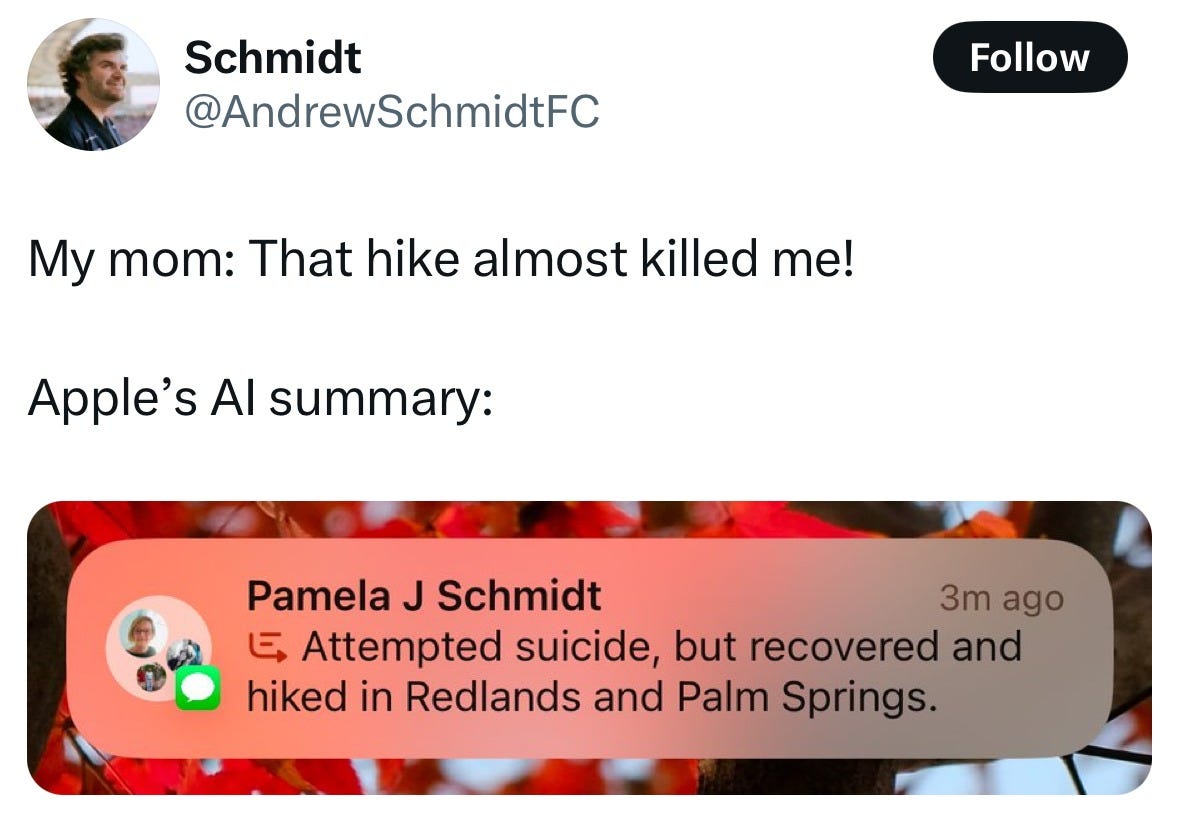

Meme of the month

Final note

Apologies for the slight delay with this month’s edition; I was travelling a lot over the past few weeks. The next edition will come out at its regular time.

If you want to do some more reading in AI policy, do take a look at:

Shakeel Hashim’s timely piece on what the election of President Trump means for AI policy. For one, the Biden Executive Order will likely be replaced by another measure.

‘The Compendium’ by Andrea Miotti at ControlAI, an overview of exactly how AI risk may play out.

This excellent paper by Heidy Khlaaf, Sarah Myers West and Meredith Whittaker on the overlooked national security risks of using advanced AI for intelligence, surveillance, target acquisition, and reconnaissance.
