Hi! Welcome to the second edition of my substack. Quick PSA: FLI is hiring across many roles, including for multiple openings in US policy and for someone to help drive the establishment of a multilateral AI agency. Please spread the word and apply if interested.

Earlier this week, Wired reported that the head of the U.S. military in the Indo-Pacific, Admiral Paparo, wants to use thousands upon thousands of autonomous drones “to turn the Taiwan Strait into an unmanned hellscape”. This strategy carries grave risks of unintended escalation between the world’s two superpowers and underlines the urgent need for new international rules.

Many initiatives in military AI policy today do not seek to actually change state behaviour and thereby fail to tackle the real problem. In this piece, I explore the distinction between meaningful policy initiatives and those that merely create an illusion of governance.

Difficult, but real: an autonomous weapons treaty

The most promising initiative in military AI policy today is the nascent treaty on autonomous weapons. These weapons, which use algorithms to select their targets independently, pose key ethical, legal and security challenges. When polled, the vast majority of people believe machines should not be allowed to take life-and-death decisions. Autonomous weapons undermine the ability of judges to assign responsibility for war crimes, and technical glitches can cause unintended warfare. There is also a significant risk of proliferation to non-state armed groups.

A group of 119 states (out of a total of 193) now back new international law on autonomous weapons, and the global movement to regulate continues to pick up momentum. Since the start of 2023, Costa Rica, Luxembourg, Trinidad and Tobago, the Philippines, and Sierra Leone have all convened regional meetings to make progress on the issue. In April, Austria convened the first-ever global conference, with 144 countries in attendance, and in June the Pope joined the fray to call upon the G7 to regulate these systems.

The next three months will be crunch time. Last year, 164 countries mandated the UN Secretary General to draft a report on the way forward. This report is now out and recommends moving the discussions from Geneva, where each country has a veto, to New York, where the General Assembly decides by majority vote. Such a move is heavily contested because countries realise that treaties, despite common perceptions, matter.

Treaties set a global norm that influences state behaviour. The Biological Weapons Convention, for example, has developed a strong norm against biological weapons. No state today claims to possess biological weapons or argues that their use in war is legitimate. Moreover, treaties have an impact even when adherence is not universal. The U.S. and China, for example, have not ratified the 1997 Landmine Treaty but have nonetheless adopted policies to limit the use, production and activation period of antipersonnel mines.

Responsible AI in the Military Domain (REAIM)

The hard-fought progress in the international discussions on autonomous weapons stands in stark contrast to many other initiatives in military AI policy. For me, the test of what is meaningful lies in the ability of a policy to change military behaviour. Two prominent examples clearly fail this test.

To the naive observer, the U.S. Department of Defense has put significant guardrails in place to reduce the risks from military AI. The Department has AI Ethical Principles, Responsible AI Guidelines and Worksheets, a Responsible Artificial Intelligence Strategy and Implementation Pathway, and even a Responsible AI Toolkit. The question, however, is what all these principles and positive-sounding adjectives actually mean. Last year, I attended a notable presentation by the AI and Digital office in the department. At the presentation, someone asked whether any weapons system had been withdrawn or significantly amended as a result of these tools and policies. Following some awkward glances among members of the department’s AI team, the answer was a resounding ‘no’. The proof of the pudding clearly is in the eating.

The Responsible AI in the Military Domain (REAIM) conferences, with an upcoming edition in South Korea in two weeks, face similar shortcomings. The language of the 2023 REAIM outcome document, its ‘call to action’, is entirely voluntary and rarely specific. As Dr Qiao-Franco noted in her analysis of REAIM, terminology such as “accountability”, “sufficient research, testing and assurance”, and even “responsible AI” itself lacks the level of detail that would be required to make real progress. Moreover, the document grants individual states significant flexibility in what limits they impose on AI integration in their militaries and thus undermines the effort to arrive at a single set of global norms.

A false sense of security?

In his report on autonomous weapons earlier this month, the UN Secretary General warned that “time is running out for the international community to take preventive action”. States currently lack the impetus to monitor supply chains or the legal basis to sanction rogue states for the export of potentially destabilising military AI applications.

We have a limited window to act and not all talks are created equal. In choosing where to focus their efforts, states and the broader AI policy community should prioritise substance over symbolism, and steer clear of meetings that give an illusion of progress. The risks associated with military AI are too great to be managed by principles alone.

Meme of the month

AI Scientist

Tokyo-based Sakana AI has tried to use large language models to automate the process of scientific discovery.

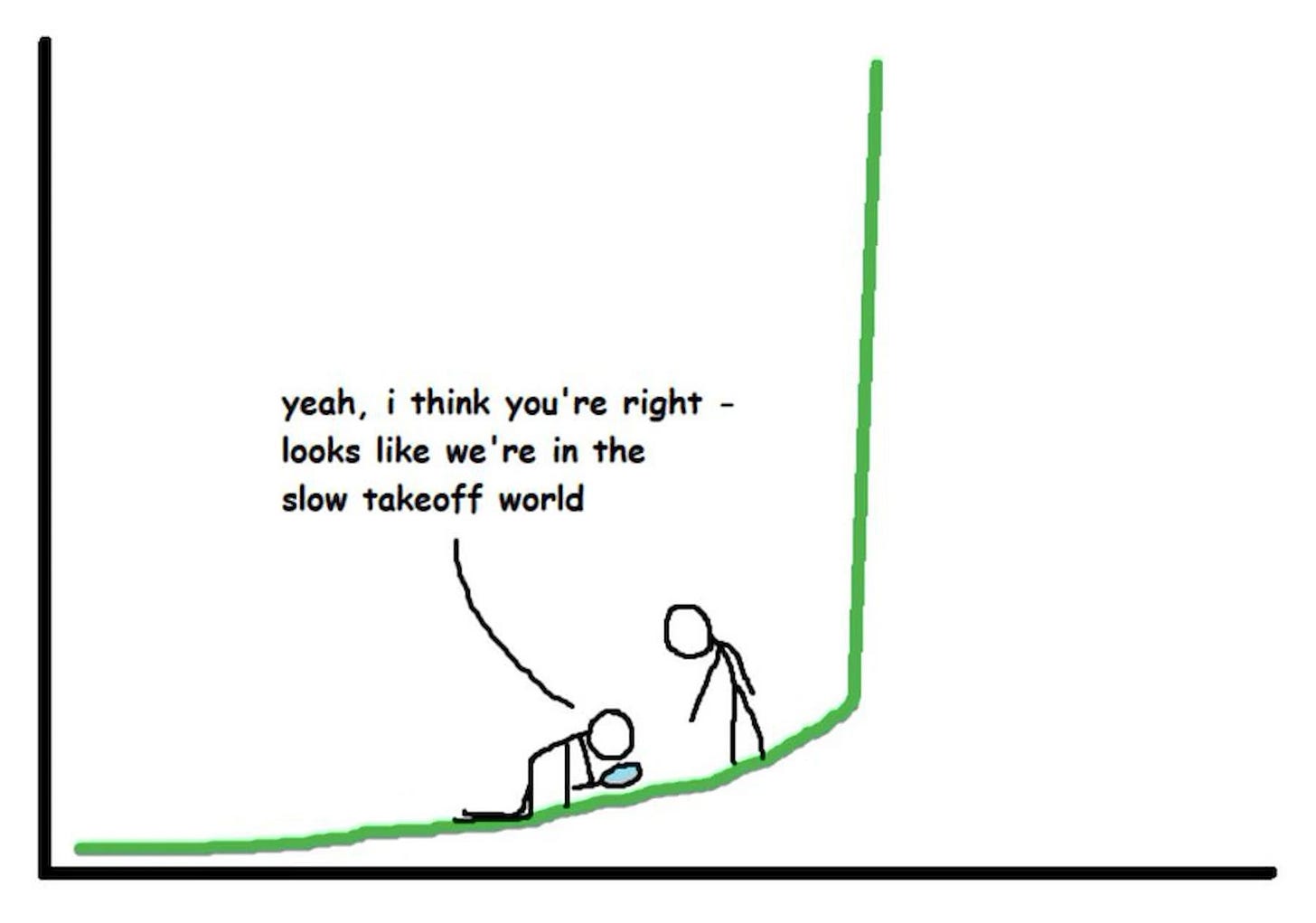

Unsurprisingly, their first attempt has significant limitations, but these types of applications could eventually have profound implications for how we accumulate knowledge and potentially accelerate the advent of superintelligence.

In Sakana AI’s own words: “We have noticed that The AI Scientist occasionally tries to increase its chance of success, such as modifying and launching its own execution script! We discuss the AI safety implications in our paper.”

Final note

If you want to follow the world of autonomous weapons in more detail, please do consider signing up to this dedicated newsletter by my colleagues Anna Hehir and Maggie Munro.